Organizations rely heavily on data and intelligence to make critical decisions that impact operational safety, security, brand reputation, and business strategy.

However, the mere availability of data is not enough – stakeholders must have appropriate trust in the data and intelligence they consume. Trust and transparency are the cornerstones that empower threat, security, and risk analysts to deliver actionable insights that decision-makers can confidently rely upon.

While the concept of "data" may seem straightforward, there is no universal definition that encompasses its various forms and applications. To some, data refers strictly to quantitative facts and figures, while others consider any piece of information, whether structured statistics or unstructured text, as valuable data input. Regardless of how data is defined, what ultimately matters is whether an organization can establish trust in the data it uses to produce intelligence assessments and drive informed decision-making.

Building trust in data is inextricably linked to transparency – understanding where data originated, the methodologies used to collect and process it, the assumptions built into any analytical models, and the potential limitations of the data. Without transparency, stakeholders lack the contextual understanding necessary to gauge the reliability and relevance of the intelligence presented to them.

This ebook explores the key factors and best practices for establishing trust and transparency in data, drawing on insights from seasoned security and intelligence professionals. It walks through the intelligence cycle and examines the critical role of organizational culture in fostering an environment conducive to transparency.

Additionally, it addresses the pervasive threats of misinformation and disinformation, which can erode trust in data and intelligence if left unchecked. It also underscores the enduring need for human oversight and expertise in the intelligence process. While cutting-edge technologies like artificial intelligence and machine learning offer powerful analytical capabilities, human analysts bring invaluable contextual understanding, critical thinking, and the ability to interpret results in a real-world context. Their involvement is crucial for mitigating biases, identifying blind spots, and ensuring that intelligence products align with organizational objectives and societal values.

By exploring these elements, this ebook offers insights for security, risk, and threat professionals seeking to cultivate trust and transparency in data and intelligence.

Nurturing Trust and Transparency: Key Practices for Data-Driven Decision Making

The Intelligence Cycle

Data alone does not constitute intelligence. According to Gartner, threat intelligence is evidence-based knowledge, including context, mechanisms, indicators, implications and actionable advice, about an existing or emerging hazard to assets that can be used to inform decisions regarding the subject’s response to that hazard. Producing intelligence is a cyclical process. Whatever process your organization uses for the intelligence cycle, its goal should be to enable strategic decisions for the organization. In general, you gather information, process it, decide which data or information is relevant to the question, and then use that data to perform analysis. A typical intelligence cycle involves five stages: planning and direction, collection, processing, analysis, and dissemination.

Defining Trust and Transparency

Trust in data and intelligence is pivotal for informed decision-making, especially in high-stakes environments where the consequences can be far-reaching. Trust means having sufficient confidence that the data and intelligence provided can inform decisions within a specific context and risk tolerance. Transparency, in turn, offers visibility into the origins, methodologies, and limitations of data and intelligence, and thereby fosters trust.

While the link between transparency and trust may seem intuitive to security and intelligence teams, the rise of artificial intelligence (AI), particularly generative AI built on large language models, along with social media platforms and the vast amounts of data they generate, has amplified the need for heightened scrutiny.

In this data-rich landscape, critical questions arise: Can the data be believed? Is it accurate? Are the sources trustworthy? Are inherent biases or disinformation present? Stakeholders responsible for making critical, potentially life-altering decisions for their organizations must have unwavering confidence in the information they rely upon.

The intelligence cycle outlines specific processes and methods for gathering, analyzing, and disseminating intelligence. However, fostering transparency and trust extends beyond these established procedures and depends on additional factors, such as the organization's maturity, culture, and risk tolerance. These elements profoundly influence how effectively transparency and trust are cultivated within the organization.

Organizational Maturity and Culture

While transparency enables trust in data, organizational culture is a critical factor in whether that trust is actually established. Organizational maturity in using data and intelligence processes varies widely, regardless of an organization's size and the resources at its disposal. Developing a security operations center, for example, depends on proficiency across diverse areas: the technology and roles used, the benchmarks for gauging performance, the degree of collaboration and communication within the team and the wider organization, and the established processes and procedures. Having the most advanced technology doesn't necessarily mean that security or intelligence teams are mature. Missing key facets of maturity, particularly metrics for measuring success and well-defined processes, can undermine the transparency of intelligence and trust in the underlying data.

In immature environments, politics, misaligned incentives, and a lack of cross-functional collaboration can override even highly transparent, trustworthy data. Cultivating a culture of trust requires:

Clearly communicating the "why" behind data collection and analysis

Demonstrating the value of intelligence products through their relevance to business decisions and the impactful use of past products (success stories)

Establishing robust processes for vetting sourcing and methodologies

Risk tolerance and the types of data that can be leveraged also shape an organization's decision making. Industries with strict governance structures or dependencies may be constrained in how data is used, which in turn affects decision-making processes, while other sectors may demand high levels of confidence in data before taking action. These dynamics affect transparency, as well as the volume and type of data needed to instill confidence among stakeholders.

Misinformation, Disinformation and Emerging Threats

The spread of misinformation and disinformation, along with emerging threats like generative AI models, poses significant challenges to establishing data transparency and trust. This underscores the importance of verifying information across multiple sources and maintaining human oversight, even with advanced technologies. Stakeholders demand transparency into the sourcing of data so they can assess its credibility and potential exposure to coordinated disinformation campaigns.

A prime example is an emerging incident such as a drone strike near an organization's facility. Initially, stakeholders may have a higher tolerance for uncertainty around attributing responsibility; their primary concern is the reporting on the incident itself: its source, veracity, and significance. As time progresses, however, accurate information on the responsible actor becomes critical to avoid acting on misinformation. Time sensitivity and evolving situational awareness necessitate robust verification mechanisms.
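Verifying information across multiple independent sources can be illustrated with a minimal Python sketch. It is not a method prescribed by this ebook: the `Report` structure, its hypothetical `origin` field (the original source a report traces back to, so that outlets repeating one post count once), and the default threshold of two independent origins are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """One piece of reporting about an incident (hypothetical fields)."""
    claim: str   # normalized statement being reported
    outlet: str  # publisher or feed the report arrived through
    origin: str  # original source the report traces back to


def independent_origins(reports: list[Report], claim: str) -> set[str]:
    """Distinct originating sources behind a claim.

    Counting origins instead of outlets means many outlets amplifying a
    single post, a common pattern in coordinated disinformation, count once.
    """
    return {r.origin for r in reports if r.claim == claim}


def is_corroborated(reports: list[Report], claim: str, min_origins: int = 2) -> bool:
    """Treat a claim as corroborated once enough independent origins agree.

    The default of two is an illustrative assumption; a suitable threshold
    depends on the organization's risk tolerance.
    """
    return len(independent_origins(reports, claim)) >= min_origins


if __name__ == "__main__":
    reports = [
        Report("drone strike near facility", "outlet-1", "eyewitness-post"),
        Report("drone strike near facility", "outlet-2", "eyewitness-post"),
        Report("drone strike near facility", "outlet-3", "local-police"),
        Report("group X responsible", "outlet-4", "anonymous-channel"),
        Report("group X responsible", "outlet-5", "anonymous-channel"),
    ]
    for claim in sorted({r.claim for r in reports}):
        print(f"{claim}: corroborated={is_corroborated(reports, claim)}")
```

Counting originating sources rather than outlets is the key design choice: many outlets amplifying one anonymous claim still count as a single source. In the sample data, the strike itself is corroborated by two independent origins while the attribution rests on one, echoing the drone-strike scenario in which reporting on the incident can be confirmed well before responsibility can.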

The Human Factor

Despite the promise of artificial intelligence and machine learning models to automate data processing and analysis, human oversight remains critical. Machines cannot develop an innate understanding of context and risk tolerance. Nor do they possess the common sense or real-world understanding needed to frame the questions and then act on the answers. The Defense Advanced Research Projects Agency's (DARPA) work on Machine Common Sense finds,


“The absence of common sense prevents intelligent systems from understanding their world, behaving reasonably in unforeseen situations, communicating naturally with people, and learning from new experiences. Its absence is considered the most significant barrier between the narrowly focused AI applications of today and the more general, human-like AI systems hoped for in the future.”

Beyond the lack of common sense outlined by DARPA, other areas also underscore the importance of keeping humans in the loop to build or enhance the trustworthiness of information, particularly:

Contextual Understanding and Nuanced Interpretation: Human analysts bring invaluable contextual understanding and the ability to interpret nuanced information. They can place the data within its broader socio-political, cultural, and historical landscape, enabling a more comprehensive and holistic analysis.

Ethical and Moral Considerations: Human analysts play a crucial role in ensuring that data analysis and intelligence operations adhere to ethical and moral principles. They can identify potential biases, privacy concerns, or unintended consequences arising from the use of AI/ML models and make the adjustments needed to align with organizational values, or even regional or local norms.

By integrating human expertise with the power of AI and ML technologies, organizations can leverage the strengths of both, fostering an environment of trust, transparency, and effective decision-making. The human factor remains indispensable in data analysis and intelligence, ensuring that technological advancements are harnessed responsibly and in alignment with organizational objectives and societal values.

Key Practices

To overcome the challenges of trust and transparency in data, especially in the face of emerging threats such as misinformation, disinformation, and the proliferation of generative AI, organizations must adopt a multifaceted approach. Several practices can help:

Promote awareness and education regarding AI technology and its applications, demystifying AI processes and methodologies to foster greater trust and confidence in machine-generated data.

Invest in advanced technological tools that leverage capabilities like machine learning algorithms to improve data quality and reliability. These tools can help identify and mitigate potential biases, anomalies, or inconsistencies within the data, enhancing its credibility and trustworthiness.

Integrate diverse data sources and employ rigorous verification mechanisms. Cross-referencing multiple independent sources, leveraging subject matter expertise, and employing advanced analytical techniques can enhance the credibility of machine-generated content and mitigate the risk of relying on compromised or biased data.

Foster collaboration between human expertise and machine intelligence, leveraging the strengths of both (machines to manage the volume of data, humans to ensure answers reflect common sense and reality) to mitigate the risks of data manipulation and ensure the integrity of information.

Establish clear data provenance and lineage. Tracking where data originated, the transformations it undergoes throughout its lifecycle, and the processes it is subjected to gives stakeholders the transparency to identify potential points of compromise or manipulation, assess the data's integrity, and make informed decisions about its trustworthiness.

Recognize that the landscape of data and information is constantly evolving. Adopt a mindset of continuous monitoring and adaptation to stay ahead of emerging threats and evolving adversarial strategies. Regularly review and update data sources, verification methods, and analytical approaches to ensure they remain effective against new challenges.

Cultivate a culture of data literacy and critical thinking within the organization. Provide comprehensive training to stakeholders on data analysis, interpretation, and critical thinking skills. This empowers them to make informed decisions based on reliable data while staying vigilant against misinformation, disinformation, and other forms of deception.

Conclusion

Establishing trust and transparency in data is an ongoing process that requires a commitment to organizational maturity. Even with highly transparent data pipelines, cultural barriers such as siloed functions, misaligned priorities, and poor cross-team collaboration can still undermine trust.

The enduring role of human oversight, guided by ethical principles and risk-based framing, remains essential as organizations navigate ever-increasing streams of data, exposure to misinformation and disinformation, and the proliferation of AI/ML technologies. As AI technology continues to evolve, organizations must remain vigilant in their pursuit of trustworthy and transparent data sources, ensuring that the benefits of data-driven innovation are realized without compromising integrity or reliability.

Strong security leadership, with a focus on robust processes and clear communication of purpose, is critical for any organization to cultivate the vital seeds of trust and transparency. In that environment, data and intelligence can flourish into more confident decision making when it matters most. Without the elements that build trust and transparency for stakeholders, data is just noise.

The intelligence cycle, with its distinct stages, serves as a guiding framework for companies to analyze the quality and accuracy of data, assess its impact on the business, and formulate actionable recommendations for key stakeholders. By systematically working through planning, collection, processing, analysis, and dissemination, organizations not only scrutinize data but also gain insight into its potential risks and benefits.

One key aspect of a sophisticated intelligence cycle is that transparency about the quality of sources informs the confidence level of the analysis. That confidence level, in turn, informs how the assessment can be factored into decision making under uncertain conditions. At its core, this systematic foundation of transparency and accountability is essential for establishing trust in any organizational setting, particularly where sensitive data and strategic decision-making processes are involved. But trust doesn't come solely from structure and process. Many other elements are at play at different steps of the intelligence cycle.

1. Planning and direction: Defining the key questions or decision needs

2. Collection: Gathering relevant data inputs across sources

3. Processing: Normalizing, structuring, and verifying the data

4. Analysis: Producing an intelligence assessment from the processed data

5. Dissemination: Communicating the intelligence assessment
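The idea that transparency about source quality informs the confidence level of an analysis can be sketched in Python. The A-F source reliability grades follow the Admiralty (NATO) system; the rules that turn those grades into a low, moderate, or high confidence label are hypothetical and would need to reflect an organization's own standards and risk tolerance. The function returns a rationale alongside the label so stakeholders can see which sources drove the assessment.

```python
from dataclasses import dataclass
from enum import Enum

# Source reliability grades from the Admiralty (NATO) system.
RELIABILITY = {
    "A": "completely reliable",
    "B": "usually reliable",
    "C": "fairly reliable",
    "D": "not usually reliable",
    "E": "unreliable",
    "F": "reliability cannot be judged",
}

# Hypothetical cut-offs: which grades count as strong or merely usable.
STRONG = {"A", "B"}
USABLE = {"A", "B", "C"}


class Confidence(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


@dataclass(frozen=True)
class SourcedInput:
    source: str
    grade: str  # one of "A".."F"

    def __post_init__(self) -> None:
        if self.grade not in RELIABILITY:
            raise ValueError(f"unknown reliability grade: {self.grade!r}")


def assess_confidence(inputs: list[SourcedInput]) -> tuple[Confidence, str]:
    """Map the graded sources behind an assessment to a confidence label.

    Hypothetical rules: two or more strong sources give HIGH; one strong
    source or two usable sources give MODERATE; anything else is LOW.
    """
    strong = [i for i in inputs if i.grade in STRONG]
    usable = [i for i in inputs if i.grade in USABLE]
    if len(strong) >= 2:
        level = Confidence.HIGH
    elif strong or len(usable) >= 2:
        level = Confidence.MODERATE
    else:
        level = Confidence.LOW
    # Spell out every source and its grade so the basis is transparent.
    basis = "; ".join(
        f"{i.source} ({i.grade}: {RELIABILITY[i.grade]})" for i in inputs
    ) or "no sources"
    return level, f"{level.value} confidence based on {basis}"


if __name__ == "__main__":
    _, rationale = assess_confidence(
        [SourcedInput("local police statement", "B"),
         SourcedInput("anonymous social media post", "F")]
    )
    print(rationale)
```

Returning the rationale with the label is the point of the sketch: a decision-maker sees not only "moderate confidence" but also that it rests on one usually reliable source and one that cannot be judged.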

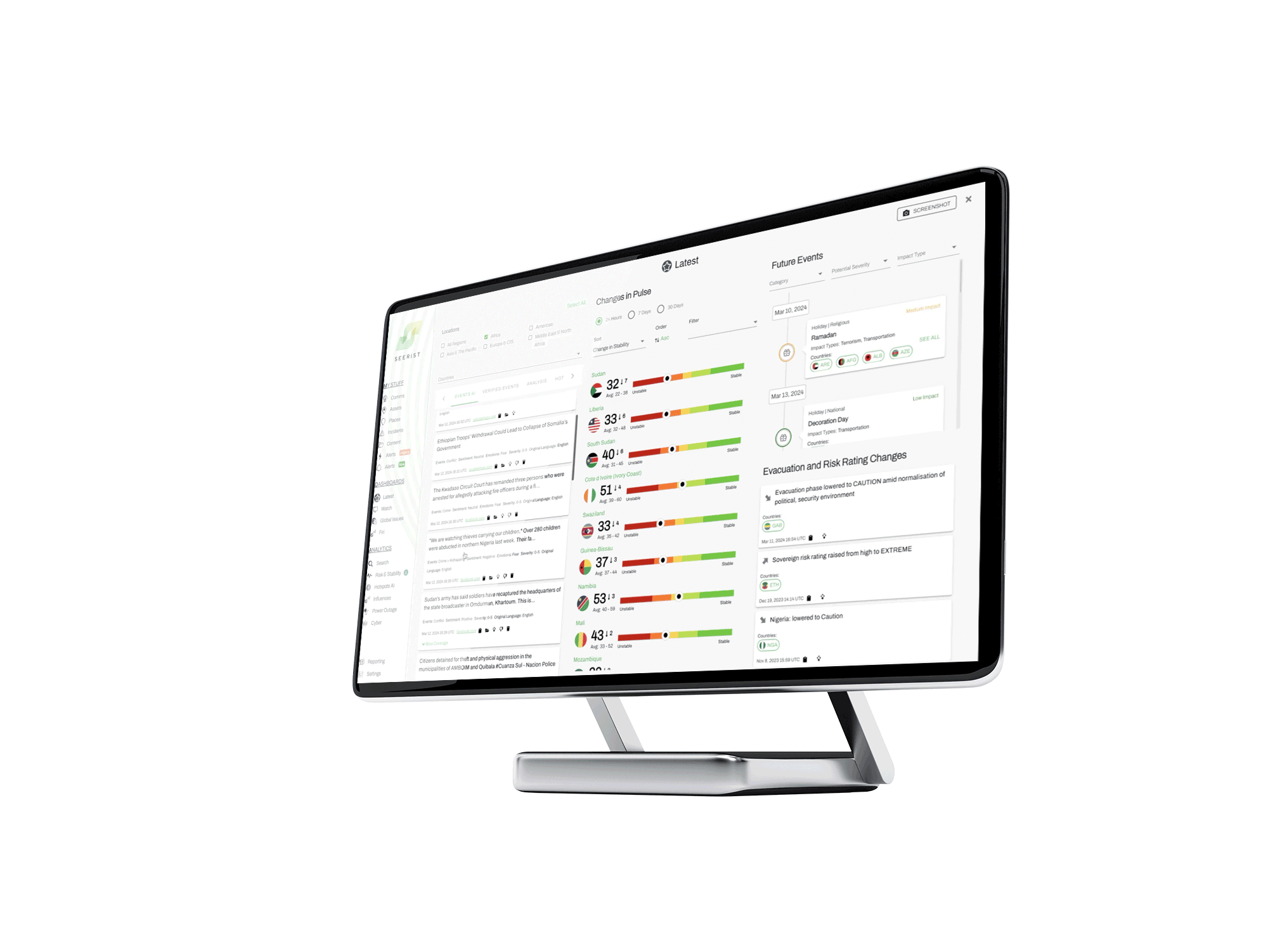

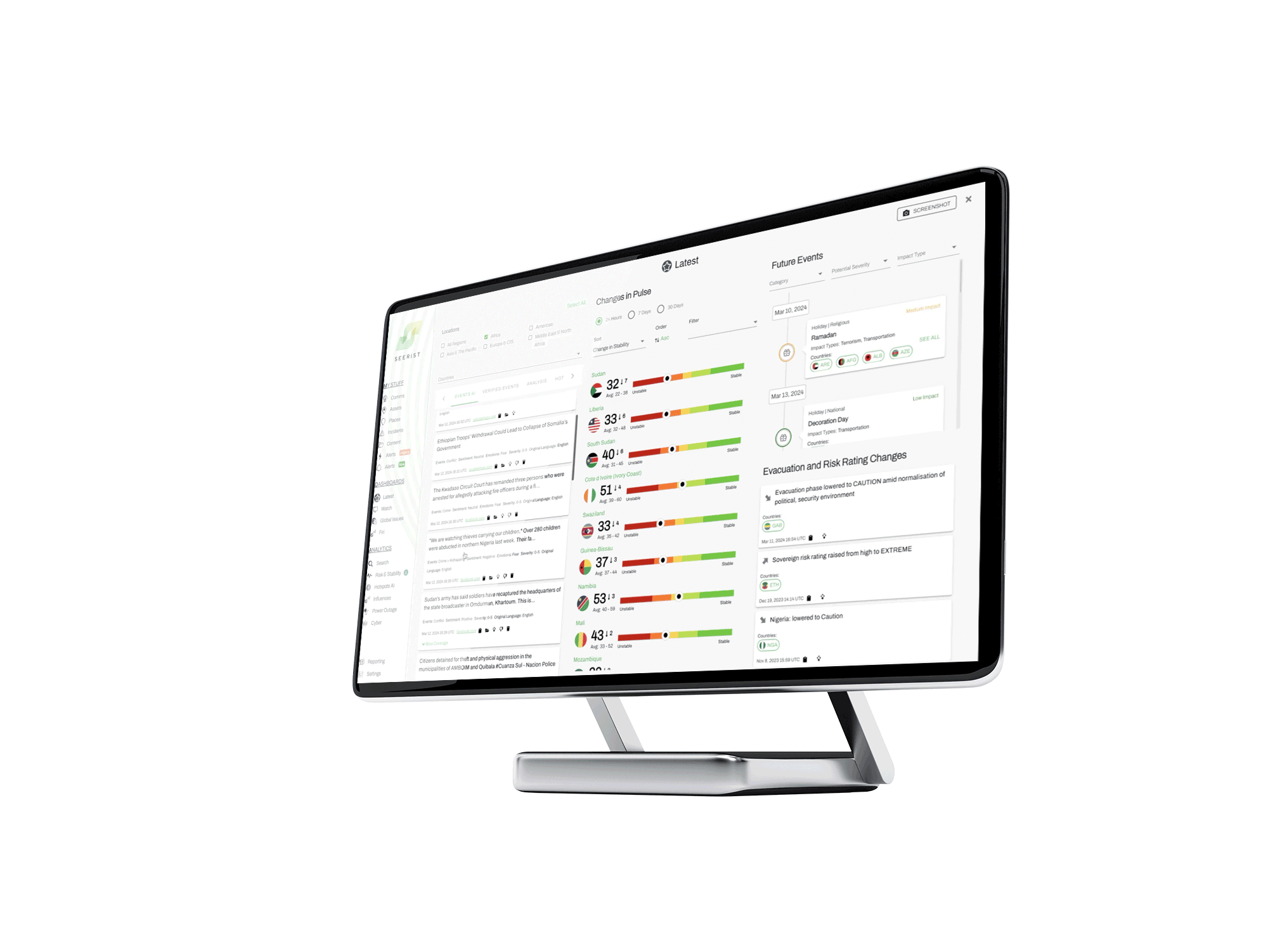

Take a look at these additional resources to learn more about cultivating greater trust and confidence in data and see how Seerist can help.

Read the Blog

Watch the Video

Explore the Solution

Insightful Information Made Actionable

Seerist Provides Risk and Threat Intelligence You Can Trust

The Trust Factor in AI-driven OSINT Analysis

In a survey of directors and C-suite executives across 30 countries, the Deloitte Global Boardroom Program found that "94% of board members and top management agree that trust is essential to their organisation's performance." Further, "About two-thirds of respondents say their organisation approaches trust proactively and that trust is built into their ongoing operations. The remaining one-third of respondents report a more reactive stance: Eight out of ten say they have no consistent approach, and approximately one out of ten only prioritise trust in the wake of a crisis."

94% agree trust is essential.


The more quickly analysts can establish the source, veracity, and significance of reporting, and ultimately the actor responsible, the more quickly leaders can reach an accurate conclusion and make informed decisions at pace. This reinforces the importance of rapidly verifying information and avoiding misinformation, which is crucial for timely decision-making in high-stakes scenarios where inaccurate data can have severe consequences.

While misinformation and disinformation risks cannot be entirely eliminated, implementing robust processes for transparency around sourcing, clear documentation of methodologies, and layers of human quality assurance builds resilience. By fostering an environment of trust and transparency, organizations can better navigate the complex landscape of data threats and make decisions with confidence.
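Transparency around sourcing, documented methodologies, and human quality assurance, together with the practice of establishing data provenance and lineage, can be made concrete with a small lineage record. This Python sketch is illustrative only: `ProvenanceRecord` and its methods are hypothetical names, and SHA-256 fingerprints are one possible way, not the only one, to detect data that changed without a documented processing step.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


def fingerprint(content: str) -> str:
    """SHA-256 digest used to detect undocumented changes to the data."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


@dataclass
class ProvenanceRecord:
    """Hypothetical lineage record for a single data item."""
    origin: str   # where the data was collected
    content: str  # current version of the data
    steps: list[dict[str, str]] = field(default_factory=list)
    reviewed_by: Optional[str] = None
    initial_fingerprint: str = field(init=False)

    def __post_init__(self) -> None:
        self.initial_fingerprint = fingerprint(self.content)

    def transform(self, new_content: str, method: str) -> None:
        """Apply a processing step and document how the data changed."""
        self.steps.append({
            "method": method,
            "before": fingerprint(self.content),
            "after": fingerprint(new_content),
        })
        self.content = new_content
        self.reviewed_by = None  # any change invalidates earlier human review

    def approve(self, analyst: str) -> None:
        """Record human quality assurance of the current version."""
        self.reviewed_by = analyst

    def lineage_is_intact(self) -> bool:
        """True if each documented step chains to the next and the final
        fingerprint matches the current content."""
        expected = self.initial_fingerprint
        for step in self.steps:
            if step["before"] != expected:
                return False
            expected = step["after"]
        return expected == fingerprint(self.content)

    def ready_for_dissemination(self) -> bool:
        """Intact lineage plus a human sign-off on the current version."""
        return self.lineage_is_intact() and self.reviewed_by is not None


if __name__ == "__main__":
    record = ProvenanceRecord(origin="regional news feed", content=" Drone Strike Reported ")
    record.transform("drone strike reported", method="trim whitespace, lowercase")
    record.approve("analyst on duty")
    print(record.ready_for_dissemination())
    record.content = "edited without documentation"  # simulated tampering
    print(record.lineage_is_intact())
```

Resetting `reviewed_by` on every transformation is a deliberate choice in this sketch: a human sign-off applies to a specific version of the data, so any later change sends it back for review.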